Complicated is a very different concept from Complex. Yet most of us do not distinguish between them. Worse, we try to manage Complex systems with Complicated solutions. And this turns out to be a huge problem.

A watch is complicated. It is composed of a large number of pieces, yet they are carefully engineered to fit and move together. The system is very reliable (it's a watch!). Most engineered systems are complicated, yet reliable. The more seamlessly the components fit together, the more reliable the system.

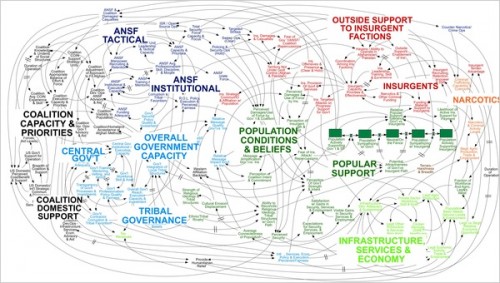

By contrast, a complex system involves many different components or contributors. They are all interconnected and interdependent, but each pursues its own interests, and together they make the system unpredictable. The now-famous slide depicting the situation in Afghanistan, presented to General McChrystal, is a classic depiction of a complex system.

Complex systems are unpredictable. They are what happens in real life, beyond what can be carefully engineered. They are what creates the unforeseen: the adventure.

Because we constantly confuse these two concepts, we misunderstand much of what is happening around us. The way to tackle and repair complicated systems is completely different from the way we can influence complex systems, and the two fail in fundamentally different ways. When a complicated system encounters unpredictable complexity, our engineering capabilities are overwhelmed and our certainties become shaky. That is when catastrophes like Fukushima happen.