In complex systems, actions or decisions tend to have unintended consequences. When a measure backfires and worsens the very problem it was meant to solve, this is called the ‘Cobra effect’, after a 2001 book. Wikipedia (Cobra effect article) and Quartz (Cobra effect post) explain it with many funny examples.

The name itself stems from an attempt by the British colonial government to eradicate cobras in Delhi. A reward paid for each dead snake led people to breed cobras in order to collect it, which increased their number. When the reward system was cancelled, the breeders released all those now worthless cobras, so the policy ended up having the opposite of its intended effect.

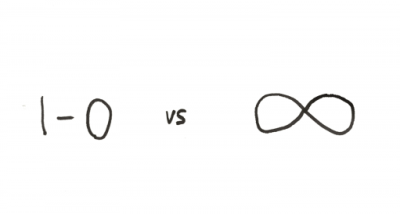

This reminds us that in complex systems, where people may make decisions to game the system, a decision that looks straightforward can have a different or even opposite effect. We therefore need to systematically test the effect of a decision on a smaller scale before rolling it out more widely. And sometimes the solution to a problem is not the obvious one, but rather something that looks only remotely connected to the problem at hand!