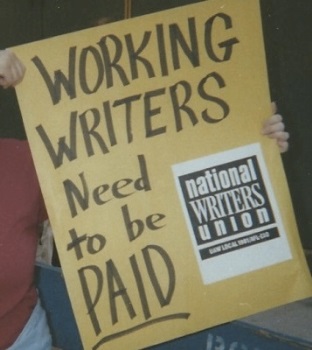

In this excellent New-York Times article ‘Does It Pay to Be a Writer?‘, the problem of writer compensation is analyzed in depth. There is a significant shift in writers’ compensation patterns at work over the last decades and faster even in the last few years.

There are less opportunities to write for a living – “Writing for magazines and newspapers was once a solid source of additional income for professional writers, but the decline in freelance journalism and pay has meant less opportunity for authors to write for pay.”

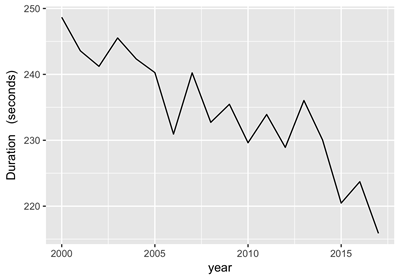

And those opportunities pay much less: “In the 20th century, a good literary writer could earn a middle-class living just writing,” said Mary Rasenberger, executive director of the Authors Guild, citing William Faulkner, Ernest Hemingway and John Cheever. Now, most writers need to supplement their income with speaking engagements or teaching. Strictly book-related income — which is to say royalties and advances — are also down, almost 30 percent for full-time writers since 2009.” A survey in the US is also quoted showing the median compensation for writers to have fallen by 40% in a few years.

Writing has thus become more of a commodity. On the other hand we also need to relativise the statement that writer wan’t make a living – historically most writers have had to supplement income with other activities, except for those writing bestsellers or being famous for some other reason. Still, income gets lower for pure writing in the gig economy and one century ago it was possible to make a decent living just writing some pieces for newspaper, which is not any more the case today.

This reinforces the observation that writing today must be part of a global package of activity – some will be journalists, professional speakers, consultants, other professionals for which writing is a way to communicate to part of the public and spread their message.