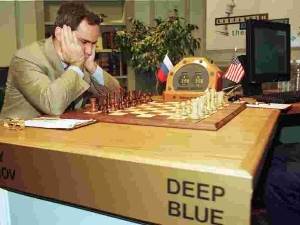

Artificial intelligence is quite often mistaken, and that is something we must know and understand (see for example the previous post on ‘How Deployment of Facial Recognition Creates Many Issues’). The best example I’ve read of humans failing to react adequately to this comes from the post ‘Pourquoi l’intelligence artificielle se trompe tout le temps’ (‘Why AI is always mistaken’, in French), which evokes the Kasparov vs Deep Blue chess match (recounted in English in ‘Twenty years on from Deep Blue vs Kasparov: how a chess match started the big data revolution’).

At some stage during the game, the computer made a move that looked quite stupid. It actually was stupid, a plain mistake by the program, but one could also believe it was brilliantly unconventional, and Kasparov was destabilized. “The world champion was supposedly so shaken by what he saw as the machine’s superior intelligence that he was unable to recover his composure and played too cautiously from then on. He even missed the chance to come back from the open file tactic when Deep Blue made a ‘terrible blunder’.”

Because of the way AI is trained, it will necessarily produce a high rate of errors once deployed. The challenge for us is to identify those occasions and not get destabilized by them.

First, AI output should probably come with a systematic warning about the possibility of a mistake. Second, we should remain critically aware of that possibility and run some simple checks on the adequacy of the output.
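As a rough illustration of these two ideas, here is a minimal Python sketch that attaches a standing warning to every AI output and then runs a few simple adequacy checks on it. Everything in it is hypothetical and chosen for illustration only: the names `CheckedOutput` and `review_output`, the `min_confidence` threshold of 0.9, and the example checks. It does not describe how any real AI system reports its results.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CheckedOutput:
    """An AI output bundled with the warnings a human should read first."""
    value: str
    confidence: float
    warnings: List[str] = field(default_factory=list)


def review_output(
    value: str,
    confidence: float,
    checks: Dict[str, Callable[[str], bool]],
    min_confidence: float = 0.9,  # assumed threshold, purely illustrative
) -> CheckedOutput:
    # The systematic warning is always present, whatever the output looks like.
    warnings = ["AI output may be mistaken; verify before acting on it."]

    # Flag outputs the model itself is unsure about.
    if confidence < min_confidence:
        warnings.append(
            f"Low confidence: {confidence:.2f} < {min_confidence:.2f}"
        )

    # Run each simple adequacy check and record the ones that fail.
    for name, check in checks.items():
        if not check(value):
            warnings.append(f"Failed check: {name}")

    return CheckedOutput(value, confidence, warnings)


# Example: two trivial checks on a text answer.
checks = {
    "non-empty": lambda v: bool(v.strip()),
    "reasonable length": lambda v: len(v) <= 200,
}
result = review_output("", confidence=0.5, checks=checks)
for warning in result.warnings:
    print(warning)
```

The point of the design is that the warning is never switched off: even an output that passes every check and has high confidence still carries the reminder that it may be wrong.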

This high error rate is of course a problem for high-reliability applications, and we should expect techniques to emerge that correct it, or that provide technological checks and balances to prevent inadvertent mistakes with real consequences.

Still, simply knowing that AI is prone to making mistakes is important: it is something we need to recognise and be able to respond to.