This Atlantic article, ‘The Internet’s Original Sin’, is a reminder of the damaging effects of a web funded by advertising. It proposes, as many articles have before, an alternative funding model.

“Advertising became the default business model on the web, ‘the entire economic foundation of our industry,’ because it was the easiest model for a web startup to implement, and the easiest to market to investors. Web startups could contract their revenue growth to an ad network and focus on building an audience.”

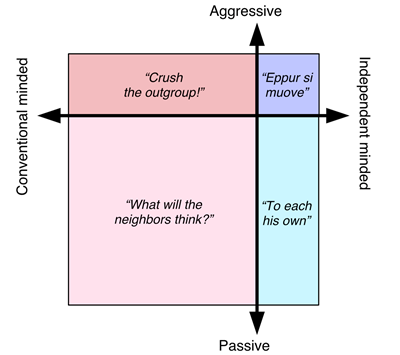

Of course, funding through advertising has had some distinctly negative effects: the development of techniques to game search algorithms, the need to pay to get promoted anywhere, and, most important, the tendency of social networks to increase stickiness by making sure you only see content that conforms to your worldview.

I am not sure, however, that we should blame advertising so much. Historically, newspapers, radio and TV stations have also been funded mainly through advertising. This is not new. What is new is the power of digital technology to take advertising to a new level of personalization, to the point of showing each user a personal view of the internet, and the fact that the advertising market has become global. For newspapers, radio and TV, regulations were introduced to ensure some balance in what was published and broadcast. That is probably what is missing for the internet now.

Introducing such regulations may be difficult, because ideally they would be global and the internet has become a playground for power ambitions. But it is definitely possible to impose regulations nationally or by region, and that is what should be done.