We know that emotions are a substantial source of decisions and actions for humans. The ability to identify those emotions is an essential technology that is now maturing, and I had not really been aware of how much progress has been made in that area. An excellent summary is given in the Quartz Obsession post ‘Sentiment analysis’.

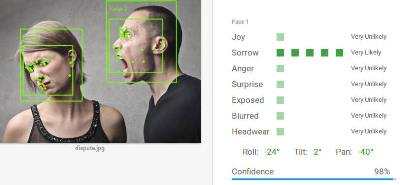

More and more companies use sentiment analysis to drive their decisions about communication. The first applications analyzed social network posts, crudely scoring them by the types of words people were using. Today, automated sentiment analysis also applies to images and videos: emotions can be detected from selfies and pictures, and even from micro-expressions in videos, which adds a lot of context and offers more subtle ways to detect people's emotional state.
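To make the "types of words" idea concrete, here is a minimal sketch of word-based scoring in Python. The word lists and the function names `sentiment_score` and `label` are my own assumptions for illustration, not taken from the Quartz post or from any particular product; real tools rely on far larger, curated lexicons.

```python
import re

# Hypothetical toy lexicon, chosen only for illustration.
POSITIVE = {"love", "great", "happy", "excellent", "good"}
NEGATIVE = {"hate", "terrible", "sad", "awful", "bad"}


def sentiment_score(post: str) -> int:
    """Count positive words minus negative words in a post."""
    words = re.findall(r"[a-z']+", post.lower())
    # Each word adds +1 if positive, -1 if negative, 0 otherwise.
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


def label(post: str) -> str:
    """Map the raw score to a coarse sentiment label."""
    score = sentiment_score(post)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(label("I love this great phone"))
print(label("What an awful, terrible day"))
```

This also shows why the approach is crude: a post such as "This is not good" still counts as positive, because the word "good" is scored without any regard for the negation before it.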

It goes further. Affective computing, for example, is defined as “computing that relates to, arises from, or deliberately influences emotion or other affective phenomena” (see the affective computing MIT group page). The definition makes it clear that we are at the stage where computing will start influencing emotions, or at least detecting our emotional state and proposing to change it. This is potentially dangerous, and this space needs to be watched closely. Social networks and brands will now influence our emotions not only inadvertently, but probably also deliberately!